The internet is no longer a place where visual evidence speaks for itself. Images and videos can now be generated, edited, and manipulated at a level that feels emotionally real—even when the events never happened. As generative AI becomes part of everyday content creation, a new question quietly replaces an old assumption: not “Is this real?” but “How do we know how this was made?”

This is where Content Credentials, built on an open standard from the C2PA, enter the picture. Rather than acting as a truth detector, Content Credentials provide something more foundational: a verifiable history of how digital media was created and modified. This article explains C2PA from a practical perspective: how it works, what it can and cannot do, and how readers, creators, and publishers can use it to build smarter trust online.

What Are Content Credentials (C2PA)?

Content Credentials are a cryptographically signed record of a media file's origin and editing history. The record, called a manifest in the C2PA specification, is usually embedded in the image or video itself but can also be stored separately and linked to it. The standard is developed by the Coalition for Content Provenance and Authenticity (C2PA), a collaboration between organizations such as Adobe, Microsoft, Google, and major media institutions.

Instead of labeling content as “real” or “fake,” C2PA focuses on provenance. It answers questions such as:

- Who created this content?

- What tool or device was used?

- Was AI involved in generation or editing?

- What changes occurred after creation?

This approach avoids subjective judgment. Provenance does not claim truth—it documents process. The official technical specification is available at: https://c2pa.org/specifications/

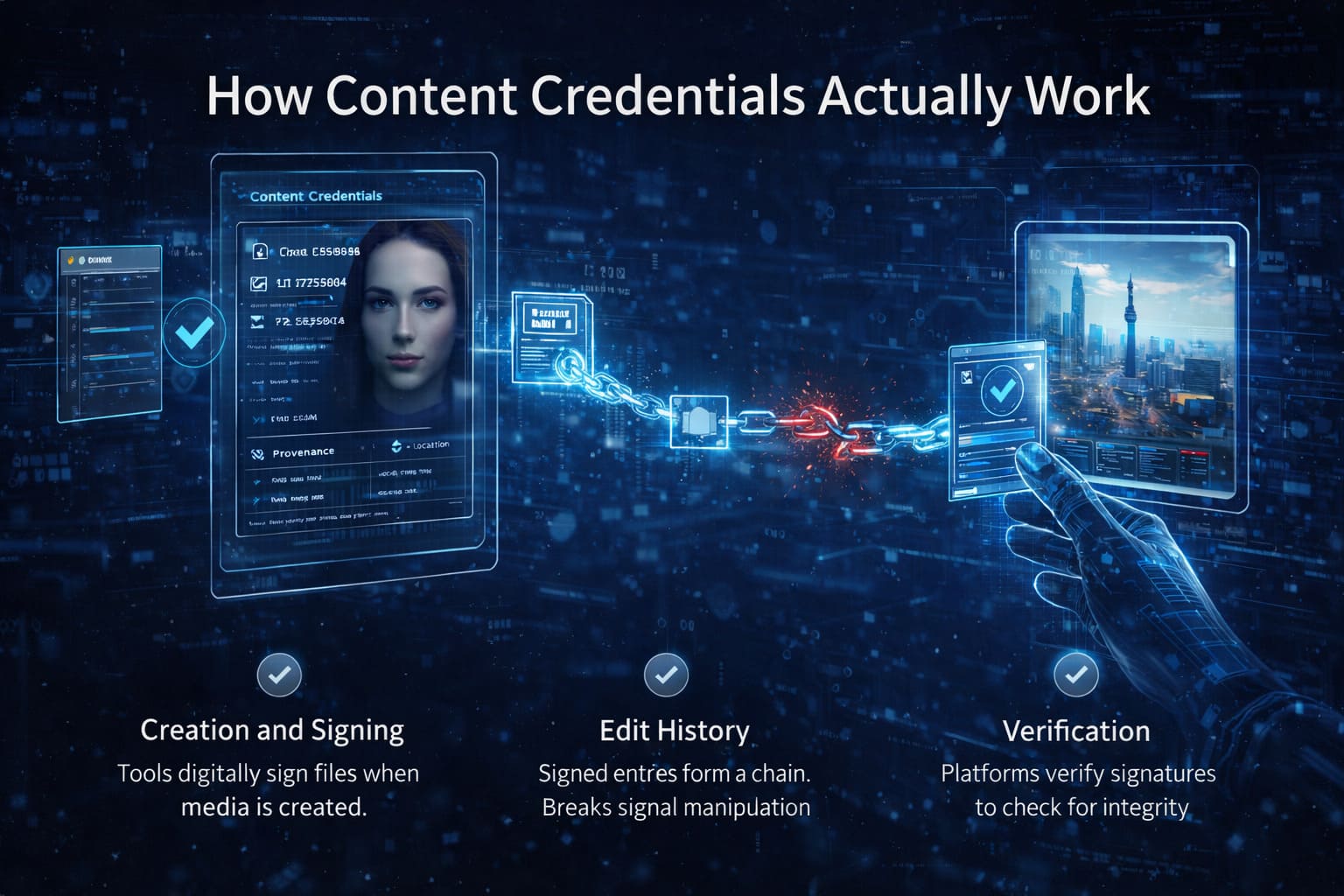

How Content Credentials Actually Work

Conceptually, Content Credentials function like a tamper-evident audit trail attached to a media file.

Creation and Signing

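When content is created using a supported camera, editing application, or AI system, the tool writes a manifest: a set of statements (called assertions) about the creation context, such as the device or generator used. The manifest is bound to the media by a cryptographic hash of its content and signed with a certificate that identifies the tool or organization, linking the file to a verifiable identity.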
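To make the idea concrete, here is a minimal Python sketch of creating a signed, hash-bound manifest. It is not the C2PA format: real tools store manifests in JUMBF boxes with CBOR-encoded claims and sign them with COSE signatures backed by X.509 certificates, while this sketch uses JSON and a shared-key HMAC from the standard library as a stand-in. The key, `create_manifest`, and the assertion fields are hypothetical.

```python
import hashlib
import hmac
import json

# Stand-in for a signing certificate's private key (hypothetical).
SIGNING_KEY = b"hypothetical-signing-key"


def create_manifest(content: bytes, assertions: dict) -> dict:
    """Bind assertions to the content's hash and sign the combined claim."""
    claim = {
        "content_sha256": hashlib.sha256(content).hexdigest(),  # hard binding to the exact bytes
        "assertions": assertions,                               # e.g. device or generator used
    }
    # sort_keys gives a deterministic serialization, so signing is repeatable.
    payload = json.dumps(claim, sort_keys=True).encode()
    signature = hmac.new(SIGNING_KEY, payload, hashlib.sha256).hexdigest()
    return {"claim": claim, "signature": signature}


image = b"\xff\xd8 example image bytes"
manifest = create_manifest(image, {"tool": "ExampleCam 1.0", "ai_generated": False})
print(manifest["signature"])
```

Because the hash covers the exact bytes, changing even one pixel produces a different `content_sha256`. That is what makes the record tamper-evident rather than tamper-proof.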

Edit History

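Each subsequent edit performed in a supported application adds a new signed manifest that references the previous one as an "ingredient." Together, these manifests form a chain. If the file is altered outside a supported tool, its content no longer matches the recorded hashes, and validation reports the chain as broken.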
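The chaining can be sketched the same way. In this hypothetical model, each new manifest stores the hash of the previous manifest as its ingredient, so rewriting an earlier step breaks the link, and an edit made outside a supported tool leaves the newest recorded content hash pointing at bytes that no longer exist. The function names and fields are illustrative, and HMAC again stands in for certificate-based signing.

```python
import hashlib
import hmac
import json
from typing import List, Optional

# Hypothetical shared key; real C2PA tools sign with certificate-backed keys.
SIGNING_KEY = b"hypothetical-signing-key"


def _digest(obj: dict) -> str:
    """SHA-256 of a deterministic JSON serialization."""
    return hashlib.sha256(json.dumps(obj, sort_keys=True).encode()).hexdigest()


def record_step(content: bytes, action: str, previous: Optional[dict]) -> dict:
    """Sign a manifest for one step, linking the previous manifest as its ingredient."""
    claim = {
        "content_sha256": hashlib.sha256(content).hexdigest(),
        "action": action,
        "ingredient": _digest(previous) if previous else None,
    }
    payload = json.dumps(claim, sort_keys=True).encode()
    return {"claim": claim, "signature": hmac.new(SIGNING_KEY, payload, hashlib.sha256).hexdigest()}


def chain_is_linked(chain: List[dict]) -> bool:
    """True if every manifest points at the exact manifest before it."""
    return all(
        current["claim"]["ingredient"] == _digest(prev)
        for prev, current in zip(chain, chain[1:])
    )


chain = [record_step(b"original pixels", "created", None)]
chain.append(record_step(b"cropped pixels", "cropped", chain[-1]))
print(chain_is_linked(chain))  # True

# An edit made outside a supported tool adds no manifest, so the newest
# recorded hash no longer matches the file's bytes.
retouched = b"cropped pixels, retouched elsewhere"
print(chain[-1]["claim"]["content_sha256"] == hashlib.sha256(retouched).hexdigest())  # False
```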

Verification

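When a viewer inspects the content on a compatible platform, the validator checks each signature, confirms that the signing certificate comes from a trusted source, and recomputes the content hash. If everything matches, the provenance record can be trusted as unmodified since it was signed. Whether to trust the signer itself remains a separate judgment.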
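A validator's core checks can be sketched as three questions: is the signer on a trust list, does the signature match the claim, and does the content still match the recorded hash? This sketch assumes a hypothetical dictionary of trusted keys in place of the certificate trust lists real validators use, and its status strings are illustrative rather than the result codes of any C2PA SDK.

```python
import hashlib
import hmac
import json

# Hypothetical trust list: signer name -> key. Real validators check X.509 chains instead.
TRUSTED_KEYS = {"example-newsroom": b"hypothetical-newsroom-key"}


def sign(content: bytes, signer: str, key: bytes) -> dict:
    claim = {"signer": signer, "content_sha256": hashlib.sha256(content).hexdigest()}
    payload = json.dumps(claim, sort_keys=True).encode()
    return {"claim": claim, "signature": hmac.new(key, payload, hashlib.sha256).hexdigest()}


def verify(content: bytes, manifest: dict) -> str:
    """Return a short validation status for one manifest."""
    claim = manifest["claim"]
    key = TRUSTED_KEYS.get(claim["signer"])
    if key is None:
        return "untrusted signer"
    payload = json.dumps(claim, sort_keys=True).encode()
    expected = hmac.new(key, payload, hashlib.sha256).hexdigest()
    # compare_digest avoids leaking timing information during the comparison.
    if not hmac.compare_digest(expected, manifest["signature"]):
        return "invalid signature"
    if claim["content_sha256"] != hashlib.sha256(content).hexdigest():
        return "content modified after signing"
    return "valid"


photo = b"photo bytes"
m = sign(photo, "example-newsroom", TRUSTED_KEYS["example-newsroom"])
print(verify(photo, m))                              # valid
print(verify(photo + b"edit", m))                    # content modified after signing
print(verify(photo, sign(photo, "unknown", b"k")))   # untrusted signer
```

Note that "valid" here only means the record is intact and came from a listed key. It says nothing about whether the depicted scene actually happened.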

It is important to understand that Content Credentials do not prevent manipulation. They make manipulation visible.

Why Content Credentials Are Becoming Essential

The rapid adoption of C2PA is not driven by novelty. It is a response to structural changes in how content is created and consumed.

Generative AI Has Scaled Visual Persuasion

Text-to-image and text-to-video tools have made realistic visuals accessible to anyone. Visual authority is no longer a reliable signal.

Platforms Need Context, Not Just Content

Search engines and social platforms are shifting toward surfacing context. Google’s “About this image” feature explicitly supports provenance metadata: https://blog.google/technology/ai/google-gen-ai-content-transparency-c2pa/

Trust Is Becoming a Competitive Advantage

For creators, publishers, and brands, transparent content history increasingly differentiates credible sources from noise.

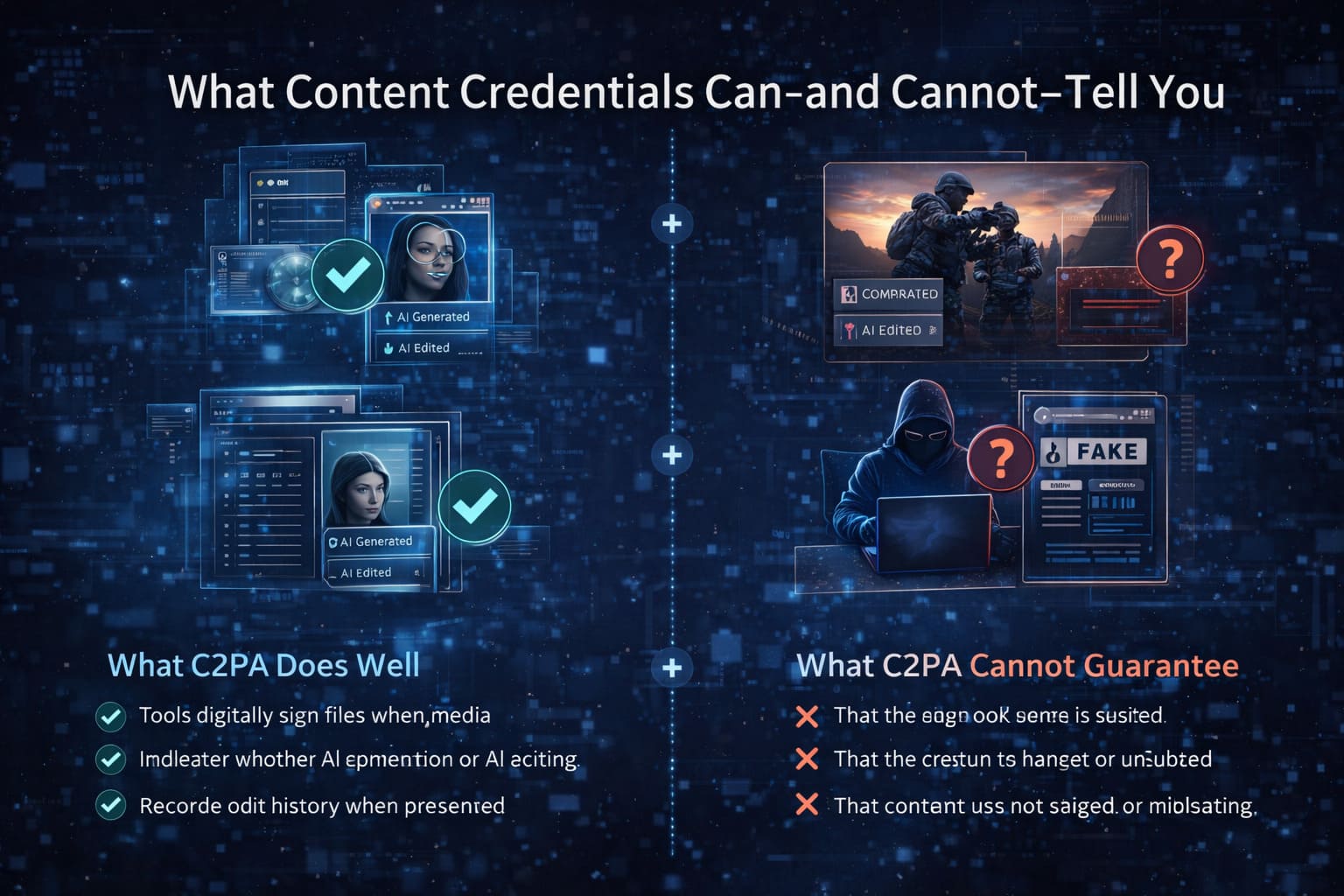

What Content Credentials Can—and Cannot—Tell You

What C2PA Does Well

- Identifies supported creation tools or devices

- Indicates whether AI generation or AI editing was used

- Records edit history when preserved

- Detects any modification of signed data after signing

What C2PA Cannot Guarantee

- That the depicted event is factual

- That the creator is honest or unbiased

- That content was not staged or misleading

This distinction matters. Content Credentials provide transparency—not truth.

Why Provenance Is More Reliable Than AI Detection

AI detection tools attempt to guess whether content is synthetic. This approach has fundamental limits.

Why Detection Struggles

- Detection is probabilistic, not definitive

- AI models evolve faster than detectors

- Compression, cropping, and re-encoding reduce accuracy
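The first point is easy to underestimate. A short Bayes' rule calculation, using hypothetical numbers rather than figures for any real detector, shows why even an accurate detector produces many false alarms when synthetic images are a small share of what it sees:

```python
def share_of_flags_that_are_synthetic(prevalence: float, sensitivity: float, specificity: float) -> float:
    """Bayes' rule: P(synthetic | flagged by the detector)."""
    true_flags = sensitivity * prevalence               # synthetic images correctly flagged
    false_flags = (1 - specificity) * (1 - prevalence)  # authentic images wrongly flagged
    return true_flags / (true_flags + false_flags)


# Hypothetical numbers: 1% of a feed is synthetic, and the detector is
# 95% accurate on both synthetic and authentic images.
print(round(share_of_flags_that_are_synthetic(0.01, 0.95, 0.95), 3))  # 0.161
```

Under these assumptions, only about 16% of flagged images are actually synthetic, so roughly 84% of flags are false alarms. Provenance avoids this trap because it does not infer anything from pixels.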

Provenance shifts the problem. Instead of guessing after the fact, it records the process at creation time—when possible.

This mirrors core cybersecurity principles seen in API logging and system auditing, where prevention and traceability scale better than pure detection: https://bytetolife.com/api-security-hidden-data-connections/

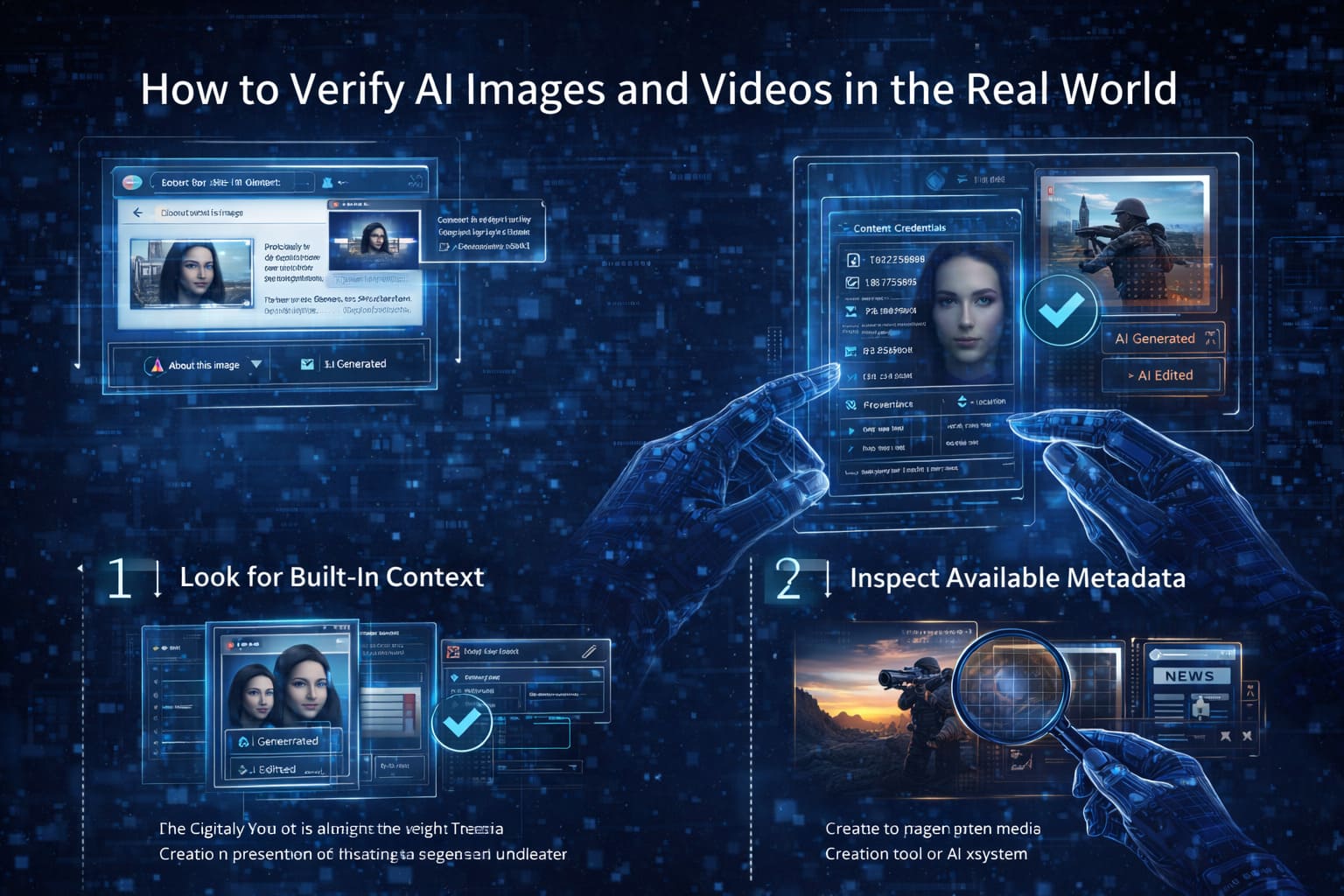

How to Verify AI Images and Videos in the Real World

Verifying content does not require advanced tools if you follow a layered approach.

Step 1: Look for Built-In Context

- Use Google Search’s “About this image” feature

- Check for provenance indicators on supported platforms

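- Look for the Content Credentials icon on media from Adobe tools and other supporting apps, or upload the file to the Content Credentials Verify tool: https://contentcredentials.org/verify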
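If you have the file itself, you can also check locally whether a JPEG carries an embedded manifest at all. C2PA stores its manifest store in JPEG APP11 marker segments as JUMBF boxes, so the sketch below walks the JPEG segment headers and looks for the "c2pa" label inside APP11 payloads. It is a presence heuristic only, assuming a well-formed JPEG: it does not validate signatures, ignores manifests stored remotely, and handles no other formats. For real validation, use a C2PA-aware validator such as the open-source c2patool.

```python
import struct

APP11 = 0xEB  # JPEG APP11 marker; C2PA embeds its JUMBF manifest store here
SOS = 0xDA    # Start of Scan: compressed image data follows, so stop scanning


def has_c2pa_segment(data: bytes) -> bool:
    """Heuristic presence check: does any APP11 segment mention the 'c2pa' label?"""
    if data[:2] != b"\xff\xd8":  # every JPEG starts with the SOI marker
        raise ValueError("not a JPEG file")
    pos = 2
    while pos + 4 <= len(data):
        if data[pos] != 0xFF:
            break  # malformed stream; give up rather than guess
        marker = data[pos + 1]
        if marker == SOS:
            break
        (length,) = struct.unpack(">H", data[pos + 2:pos + 4])  # includes the 2 length bytes
        payload = data[pos + 4:pos + 2 + length]
        if marker == APP11 and b"c2pa" in payload:
            return True
        pos += 2 + length
    return False


# Tiny synthetic example: SOI, one APP11 segment, then SOS.
segment = b"JPxxc2pa"
sample = b"\xff\xd8" + b"\xff\xeb" + struct.pack(">H", len(segment) + 2) + segment + b"\xff\xda\x00\x02"
print(has_c2pa_segment(sample))  # True
```

On a real file you would pass the bytes read with `open(path, "rb").read()`. A `False` result only means no embedded manifest was found, which, as discussed below, is not evidence of fakery.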

Step 2: Inspect Available Metadata

If credentials are present, review:

- Creator or organization name

- Creation tool or AI system

- Declared AI involvement
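When a tool exports the manifest as JSON, these three fields can be pulled out programmatically. The sketch below assumes a report shaped loosely after the JSON that c2patool prints (an `active_manifest` key pointing into a `manifests` map); field names may differ across tools and versions. AI involvement is read from the IPTC `digitalSourceType` values that C2PA actions can carry, such as `trainedAlgorithmicMedia`. The sample report, issuer, and generator names are invented.

```python
import json

# IPTC digital source type terms that indicate generative AI involvement.
AI_SOURCE_TYPES = (
    "trainedAlgorithmicMedia",               # fully AI-generated media
    "compositeWithTrainedAlgorithmicMedia",  # composite that includes AI-generated elements
)


def summarize(report: dict) -> dict:
    """Extract signer, tool, and declared AI involvement from the active manifest."""
    manifest = report["manifests"][report["active_manifest"]]
    actions = [
        action
        for assertion in manifest.get("assertions", [])
        if assertion.get("label", "").startswith("c2pa.actions")
        for action in assertion.get("data", {}).get("actions", [])
    ]
    declared_ai = any(
        action.get("digitalSourceType", "").endswith(AI_SOURCE_TYPES) for action in actions
    )
    return {
        "signed_by": manifest.get("signature_info", {}).get("issuer", "unknown"),
        "tool": manifest.get("claim_generator", "unknown"),
        "declared_ai": declared_ai,
    }


report = json.loads("""
{
  "active_manifest": "urn:uuid:example",
  "manifests": {
    "urn:uuid:example": {
      "claim_generator": "ExampleImageGenerator/1.0",
      "signature_info": {"issuer": "Example AI Lab"},
      "assertions": [
        {"label": "c2pa.actions",
         "data": {"actions": [{"action": "c2pa.created",
           "digitalSourceType": "http://cv.iptc.org/newscodes/digitalsourcetype/trainedAlgorithmicMedia"}]}}
      ]
    }
  }
}
""")
print(summarize(report))
# {'signed_by': 'Example AI Lab', 'tool': 'ExampleImageGenerator/1.0', 'declared_ai': True}
```

Keep in mind that `declared_ai` reflects only what the signing tool declared. A missing declaration is not proof that no AI was used.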

Step 3: Apply Human Judgment

Technical signals should be combined with:

- Reverse image searches

- Source credibility checks

- Logical consistency with known facts

This mindset aligns with broader digital security practices discussed in: https://bytetolife.com/7-digital-security-tips-to-keep-your-personal-data-safe/

Common Mistakes People Make

- Equating credentials with truth — provenance shows history, not accuracy.

- Distrusting content without credentials — many tools still lack support.

- Sharing screenshots of sensitive visuals — screenshots remove provenance entirely.

- Overreliance on AI detection labels — these are advisory, not definitive.

Practical Observations / Real-World Patterns

- Viral content often loses credentials after multiple re-uploads.

- Creators who consistently preserve metadata build stronger long-term trust.

- Scammers frequently bypass provenance via screen recording.

- Search platforms increasingly surface context rather than raw visuals.

These patterns reinforce that trust is cumulative, not binary.

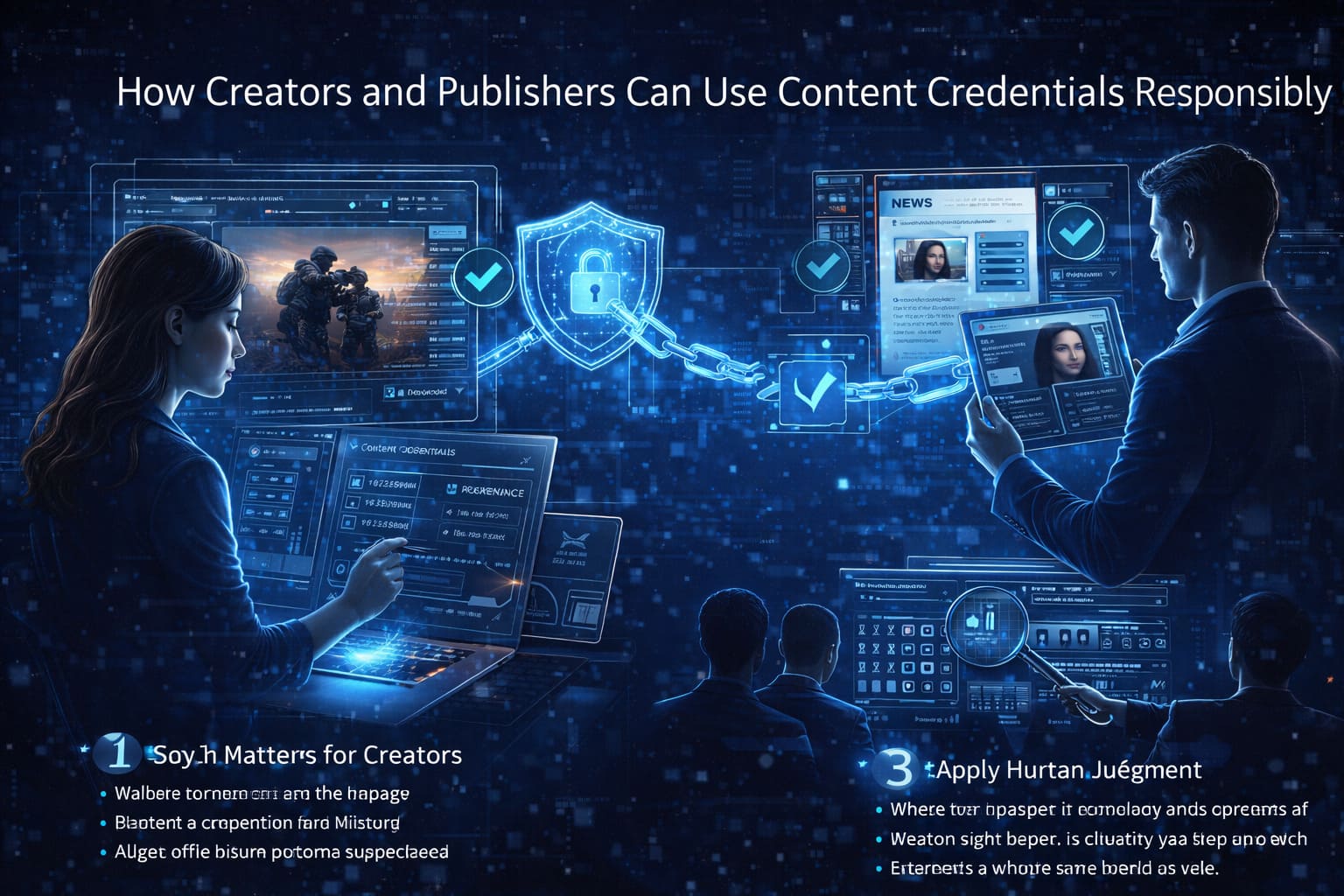

How Creators and Publishers Can Use Content Credentials Responsibly

Why It Matters for Creators

- Protects attribution and authorship

- Signals transparency to audiences

- Aligns with future platform expectations

Practical Workflow Guidance

- Use creation and editing tools that support C2PA

- Avoid stripping metadata during export

- Educate your audience on how to verify your content

For ethical AI-assisted publishing, see: https://bytetolife.com/ethics-of-ai-generated-content/

Actionable Checklist: Smarter Content Verification

- Look for Content Credentials before sharing sensitive media

- Treat provenance as a trust signal, not proof

- Avoid resharing screenshots when context matters

- Preserve metadata when publishing original work

- Combine technical signals with critical thinking

Trust Is Becoming a Digital Skill

Content Credentials are not a silver bullet. They are infrastructure—powerful, limited, and most effective when understood properly.

As AI-generated media becomes normal, trust shifts from passive belief to active evaluation. Learning how to interpret provenance, question sources, and recognize manipulation patterns is now part of modern digital literacy.

In a world where content can be generated instantly, credibility is built deliberately.

To explore how AI tools are reshaping everyday workflows responsibly, continue with: https://bytetolife.com/ai-tools-transforming-everyday-productivity/

Content Credentials in High-Risk Scenarios: Where They Matter Most

While Content Credentials are useful for everyday content verification, their real value becomes visible in high-risk scenarios—situations where misinformation, manipulation, or misplaced trust can cause real harm. Understanding these contexts helps readers apply C2PA more strategically, not blindly.

Breaking News and Crisis Imagery

During breaking news events, images and videos often spread faster than verification can occur. In these moments, Content Credentials can act as an early signal—not to confirm truth, but to indicate whether the content comes from an established workflow or an anonymous, unverifiable source.

Journalists increasingly treat provenance as a filtering layer: content with verifiable creation history is prioritized for further investigation, while media without context is handled cautiously. This does not eliminate misinformation, but it slows its amplification.

Scams, Hoaxes, and Emotional Manipulation

Many modern scams rely on emotionally charged visuals: fake disaster images, fabricated screenshots, or manipulated videos designed to trigger urgency. In practice, these materials almost never carry intact Content Credentials.

Scammers avoid provenance because it limits plausible deniability. As a result, the absence of credentials—when combined with emotional framing—is often a meaningful warning signal.

This pattern aligns with broader scam mechanics explored in: https://bytetolife.com/protect-against-ai-voice-scams-and-deepfake-vishing/

Content Credentials and SEO: Why Provenance Matters for Publishers

For publishers and site owners, Content Credentials are not just a trust feature—they are a long-term visibility signal.

Search Engines Favor Context

Search engines increasingly emphasize context, authorship, and credibility. While C2PA is not a direct ranking factor, it supports the broader signals that search systems reward: transparency, consistency, and accountability.

When images surface in search results, provenance metadata can appear as additional context, helping users decide whether to trust and click. Over time, this reinforces brand credibility.

Reducing Misinformation Risk

Publishers who consistently attach provenance reduce the risk of their content being misused, misattributed, or taken out of context. This is especially relevant for evergreen educational content, where longevity increases exposure.

For AI-driven publishing workflows, this complements responsible tool usage discussed in: https://bytetolife.com/ai-writing-tools/

Advanced Misconceptions About Content Credentials

As awareness grows, more nuanced misunderstandings emerge.

- “Credentials mean the content is ethical” — ethics are human decisions, not metadata.

- “AI-generated content always has credentials” — only supported tools add them.

- “No credentials equals fake” — legacy media and older files often lack support.

- “Credentials stop deepfakes” — they expose gaps but cannot eliminate threats.

Understanding these limits prevents overconfidence, which is one of the most dangerous failure modes in digital trust systems.

Actionable Checklist: Reader Mode vs Creator Mode

For Readers

- Pause before sharing emotionally charged visuals

- Check for Content Credentials when context matters

- Treat missing provenance as a signal, not proof

- Combine provenance with source evaluation

For Creators and Publishers

- Use tools that support Content Credentials by default

- Preserve metadata throughout the publishing workflow

- Avoid export settings that strip provenance

- Educate audiences on how to verify your content

The Long-Term Reality: Trust Is No Longer Automatic

Content Credentials represent a shift in how trust is constructed online. Instead of assuming authenticity, users increasingly evaluate signals, patterns, and provenance.

This shift does not make the internet safer by default. It makes informed skepticism more scalable.

In the long run, the most resilient digital environments will not be those that claim absolute truth, but those that make history, context, and process visible. Content Credentials are one piece of that foundation—useful, imperfect, and increasingly necessary.

For readers navigating AI-driven information ecosystems, trust is no longer something you receive. It is something you actively build.

Frequently Asked Questions (FAQ)

What are Content Credentials?

Content Credentials are embedded metadata that show how an image or video was created and edited. Instead of deciding whether content is “real” or “fake,” they provide transparent information about its origin, the tools used, and whether AI played a role.

Do Content Credentials prove that content is real?

No. Content Credentials do not verify truth or accuracy. They document the creation process and edit history, helping viewers understand how content was made, not whether the depicted event actually happened.

Why doesn’t every image have Content Credentials?

Many tools and platforms do not yet support C2PA, and some workflows remove metadata during export or re-upload. Missing credentials do not automatically mean content is fake.

Can Content Credentials stop deepfakes?

Content Credentials do not stop deepfakes, but they make manipulation easier to detect when supported. Scammers often avoid provenance by using screenshots or screen recordings, which remove credentials entirely.

Are Content Credentials better than AI detection?

They solve different problems. AI detection tries to guess whether content is synthetic, while Content Credentials record how content was created. Provenance is more reliable when available, but it works best alongside human judgment.

Should creators and publishers use Content Credentials?

Yes, especially for educational, journalistic, or evergreen content. Preserving provenance helps protect attribution, build long-term trust, and align with platform expectations around transparency.

Conclusion: Choosing to Pause

Not long ago, seeing felt like believing. Today, that instinct is being challenged. Images can be created without moments, and videos without people. In this new reality, Content Credentials do not give us absolute certainty—but they give us something just as important: a reason to pause.

That pause is where trust is rebuilt. When we slow down to understand how content was made, we stop reacting and start thinking. The future of the internet will not belong to the fastest voices, but to those willing to question, reflect, and protect what shared reality still means.
