Artificial Intelligence (AI) is revolutionizing content creation. From automated blog posts and marketing emails to entire novels and songs, machines are now capable of producing content that closely mimics human output. While the possibilities are exciting, they raise a critical question: what are the ethical implications of AI-generated content?

In this article, we’ll explore the core ethical concerns surrounding AI-generated content, including authorship, misinformation, bias, copyright, and the future of creative jobs. We’ll also highlight best practices and offer a balanced perspective for creators, developers, and consumers alike.

What Is AI-Generated Content?

Before diving into the ethics, let’s clarify what AI-generated content actually means. It refers to any form of media—text, audio, images, or video—that is produced by an artificial intelligence system. These systems use machine learning models trained on large datasets to generate human-like outputs.

Popular tools include:

- ChatGPT by OpenAI for text generation

- DALL·E and Midjourney for images

- Suno and Udio for AI-generated music

- Runway and Pika for video content

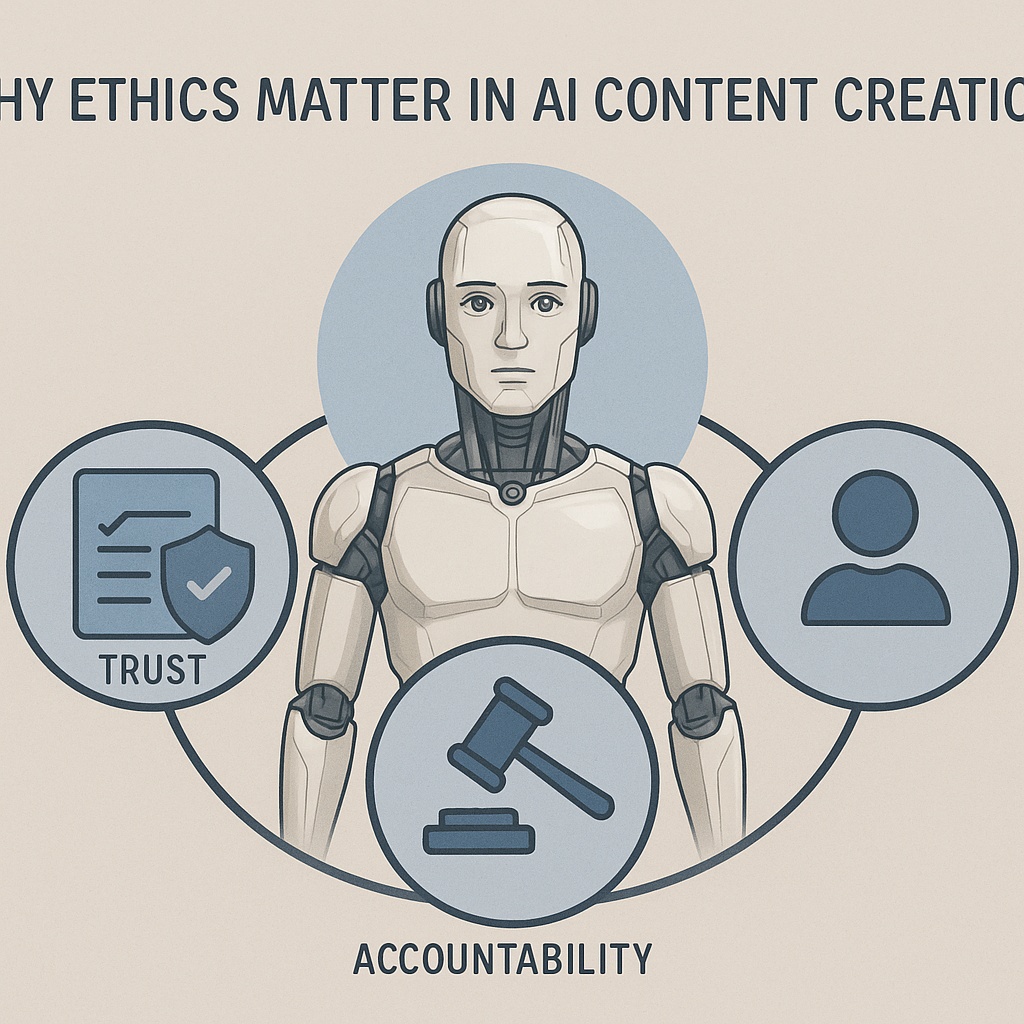

Why Ethics Matter in AI Content Creation

Ethics serve as a compass in any transformative technology. With AI, the stakes are high: the content produced can shape opinions, influence behavior, and impact livelihoods. Here’s why ethics should be central to every discussion about AI-generated content:

- Trust: Can users trust content if they don’t know it’s AI-generated?

- Transparency: Should creators disclose AI involvement?

- Accountability: Who is responsible if content causes harm?

1. Authorship and Attribution

One of the most debated topics is who gets credit for AI-generated content. When a writer drafts with AI or a musician composes with algorithms, who truly owns the creation?

Key questions:

- Should the AI model be credited?

- Is the person behind the prompt the true creator?

- Is co-authorship between human and AI possible?

While there’s no universal answer yet, many experts advocate for human attribution, especially when there’s significant human direction or editing involved.

Best practice: Always disclose AI assistance where applicable, especially in academic, journalistic, or professional contexts.

2. Misinformation and Deepfakes

AI can generate highly convincing but entirely false information. Fake news articles, fabricated quotes, and deepfake videos can be created with minimal effort, making it harder to distinguish truth from fiction.

Ethical concerns:

- Misinformation can mislead the public and influence elections or markets.

- Deepfakes may be used for defamation, revenge, or manipulation.

- Synthetic news could flood the internet with low-quality, automated stories.

Example: In 2023, AI-generated images of Pope Francis wearing a puffer jacket went viral, showing how realistic and misleading content can be.

Solutions:

- Services like NewsGuard, which rates the reliability of news sources, and AI content detectors can help flag such content, though detectors are not fully reliable on their own.

- Creators must consider the impact and intention behind every AI-generated piece.

3. Bias in AI Models

AI systems train on data that’s often laced with human bias. This means AI-generated content can perpetuate stereotypes, discrimination, or political agendas unintentionally.

Examples of bias:

- Language models generating sexist or racist stereotypes in writing prompts

- Image generators producing white-centric results when prompted for images of professionals

- Music tools trained on Western music patterns, ignoring global diversity

Solution: Developers must train models on diverse, inclusive datasets and allow user feedback loops to reduce harmful biases.

For one developer's stated approach, see OpenAI's published material on AI alignment and safety.

4. Copyright and Originality

AI models are trained on massive datasets, often scraping content from the internet—including copyrighted material. This raises thorny questions:

- Can AI content be copyrighted?

- Are AI-generated outputs considered “original”?

- Is training on copyrighted data a violation?

Legal gray areas:

- Many jurisdictions, including the United States, currently don’t grant copyright protection to works generated by AI without human authorship.

- Several lawsuits have already been filed against AI companies for allegedly using copyrighted datasets without permission.

Tip for content creators: Use royalty-free assets and check the license terms of your AI tool and its outputs. Platforms like Pexels and Pixabay offer free stock libraries under their own license terms.

5. Impact on Creative Jobs

One of the most practical ethical concerns is how AI affects writers, designers, musicians, and other creatives.

Two perspectives:

- Optimistic view: AI is a tool that augments human talent, speeds up workflows, and democratizes creativity.

- Pessimistic view: AI devalues human labor, reduces job opportunities, and floods markets with mediocre, automated content.

Real-world example: Marketing teams now use AI to write product descriptions or generate ad copy, reducing the need for entry-level content writers.

Mitigation strategies:

- Promote reskilling and upskilling in creative tech tools

- Advocate for AI-literate education in schools and universities

- Support human-AI collaboration models, not replacement

6. Transparency and Disclosure

Audiences have a right to know when content is AI-generated—especially when it influences opinions, purchases, or political beliefs.

Guidelines:

- Use disclaimers like “This article was partially generated using AI”

- In academic or research settings, cite AI tools as you would any source

- For marketing, ensure compliance with consumer protection regulations

Example: Google’s search guidance emphasizes E-E-A-T (Experience, Expertise, Authoritativeness, and Trustworthiness) and suggests disclosing AI or automation where readers would reasonably want to know how content was created.
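
The disclaimer guideline above can be applied automatically in a publishing workflow. The following is a minimal sketch, not a reference implementation: the `Article` record, the `ai_involvement` field, and the disclosure wording are all hypothetical and would need to match your own editorial policy.

```python
from dataclasses import dataclass

# Hypothetical disclosure wording; adjust to your own editorial policy.
DISCLOSURES = {
    "none": "",
    "partial": "This article was partially generated using AI.",
    "full": "This article was generated using AI.",
}


@dataclass
class Article:
    title: str
    body: str
    ai_involvement: str = "none"  # must be one of the DISCLOSURES keys


def with_disclosure(article: Article) -> str:
    """Return the body with a disclosure line appended when AI was involved."""
    if article.ai_involvement not in DISCLOSURES:
        raise ValueError(f"unknown ai_involvement: {article.ai_involvement!r}")
    note = DISCLOSURES[article.ai_involvement]
    if not note:
        # No AI involvement recorded, so the body is returned unchanged.
        return article.body
    return f"{article.body}\n\n{note}"
```

Rejecting unknown values, rather than silently treating them as "none", is a deliberate choice here: a mislabeled article should fail loudly instead of being published without a disclosure.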

Best Practices for Ethical AI Content Creation

To navigate the complex landscape of AI-generated content, follow these best practices:

- Disclose AI usage – Always let your audience know when AI tools are involved.

- Check for factual accuracy – AI can “hallucinate” false information. Always verify with credible sources.

- Avoid plagiarism – Don’t pass off AI content as your original work without significant editing.

- Be mindful of bias – Use inclusive prompts and review outputs for stereotyping or exclusion.

- Respect copyright – Use tools that avoid copyrighted data or provide appropriate licenses.
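
For teams that publish regularly, the practices above can be tracked as a simple pre-publication checklist. The sketch below is only illustrative: the `ReviewChecklist` class and its field names are hypothetical, and each flag is assumed to be set by a human reviewer, not detected automatically.

```python
from dataclasses import dataclass, fields


@dataclass
class ReviewChecklist:
    # One flag per best practice listed above; all start unchecked.
    ai_usage_disclosed: bool = False
    facts_verified: bool = False
    substantially_edited: bool = False
    bias_reviewed: bool = False
    licenses_checked: bool = False

    def missing(self) -> list[str]:
        """Names of the checks that have not been completed yet."""
        return [f.name for f in fields(self) if not getattr(self, f.name)]

    def ready_to_publish(self) -> bool:
        """True only when every check has been completed."""
        return not self.missing()


# Usage: a draft whose disclosure and fact check are done, nothing else.
draft = ReviewChecklist(ai_usage_disclosed=True, facts_verified=True)
print(draft.missing())
print(draft.ready_to_publish())
```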

The Future: Toward Ethical AI Content Standards

As AI becomes deeply embedded in content creation, there’s an urgent need for industry-wide ethical standards. Organizations, governments, and tech companies must work together to:

- Develop AI usage guidelines

- Establish regulatory frameworks

- Create certification programs for ethical AI tools

Some encouraging developments include:

- The Partnership on AI’s efforts to promote ethical AI development

- Open-source frameworks promoting explainability and auditability

- Increased public pressure on tech giants for transparency and fairness

Final Thoughts

AI-generated content is here to stay. It’s reshaping how we write, design, communicate, and consume information. But with great power comes great responsibility.

By approaching AI content ethically—disclosing its use, ensuring accuracy, respecting copyrights, and staying aware of bias—we can harness its potential without sacrificing human values.

Let’s create a future where AI empowers creativity rather than replaces it, and where innovation is always aligned with integrity.